About me

(The site is under construction! Sorry for the temporarily limited information.)

I am a postdoctoral researcher at CEITEC with interests in technical AI safety, security, and the science of deep learning. I hold a PhD in applied physics and have worked on interpretable machine learning for spectroscopy as well as foundational aspects of deep learning. I also collaborate with KASL and was previously a visiting PhD student at the University of Cambridge, advised by David Krueger.

My current research focuses on foundational topics in machine learning, such as loss-landscape geometry, parameter space symmetries, mode connectivity, and overparameterization, with the broader aim of advancing AI security and safety. I combine empirical and theoretical approaches, often grounded in physics, to better understand deep learning and improve its interpretability and robustness.

When I’m not busy with ML experiments, you can find me bouldering or cycling. I also enjoy hiking, playing guitar, and reading physics books from my vast collection.

News

Oct. 2025: I gave a talk on Mode Connectivity for AI Security & Safety at the Oxford AI Safety Initiative’s technical roundtable seminar.

July 2025: I am joining the Artificial Intelligence Governance Initiative (AIGI) at the University of Oxford as a Visiting Research Fellow for three months to work on automated interpretability (with Fazl Barez).

Jan. 2025: Input space mode connectivity was accepted to ICLR 2025.

Oct. 2024: Input space mode connectivity was accepted for an oral presentation at SciForDL at NeurIPS 2024.

Aug. 2024: I am attending the IAIFI summer school and workshop at MIT, where I will give a talk on input space mode connectivity.

June 2024: I am visiting KASL $\subset$ CBL, University of Cambridge for four months.

May 2024: I will be at the Youth in High Dimensions workshop at ICTP in Trieste, Italy.

Research interests

- Machine learning foundations

  - overparameterization, double descent, NTK

  - loss-landscape symmetries, mode connectivity

  - sparsity, lottery tickets

- ANN interpretability (for spectroscopic data)

  - feature visualization, optimal manifold

  - sparsity for (mechanistic) interpretability

  - custom loss penalization

- AI safety

  - LLM jailbreaking (defenses)

Current projects

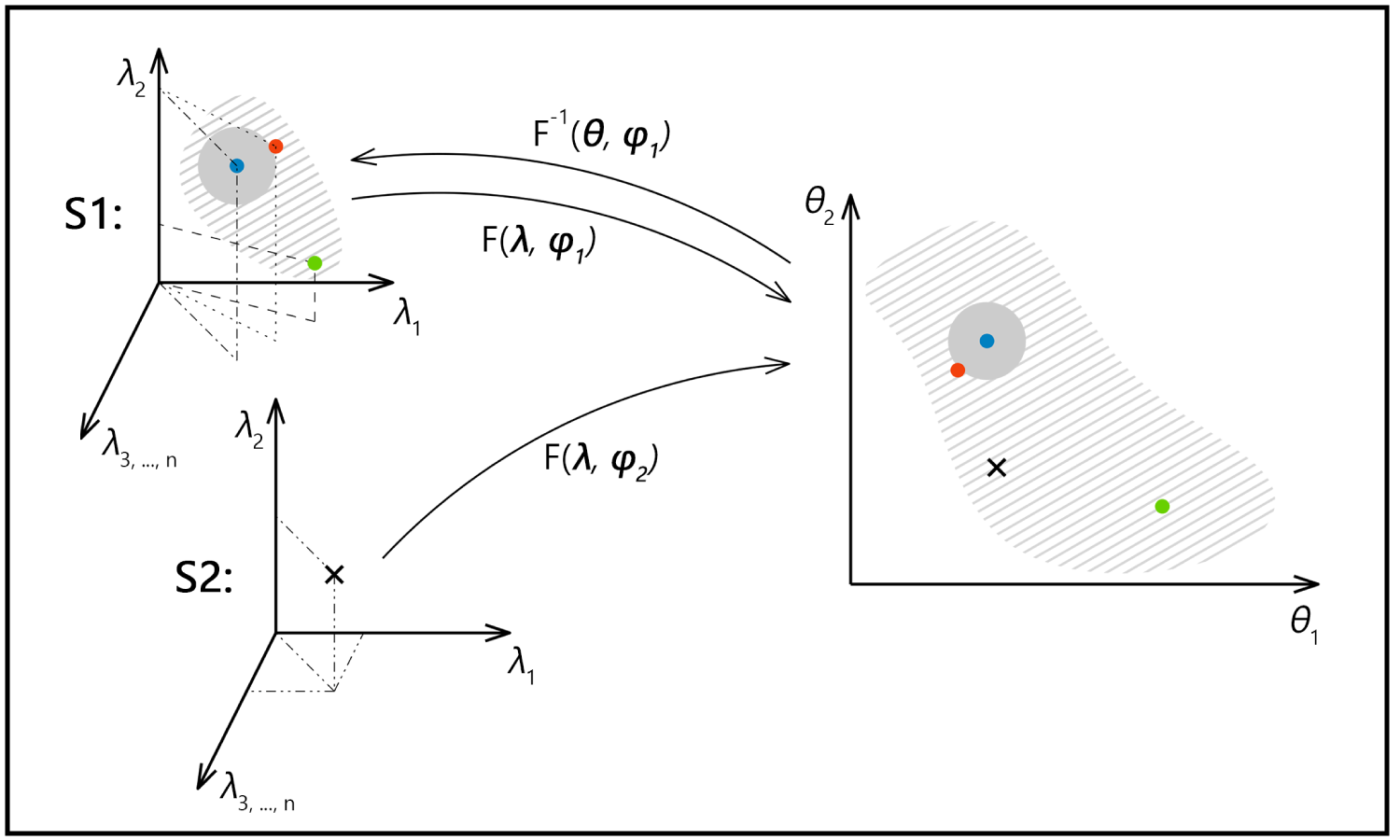

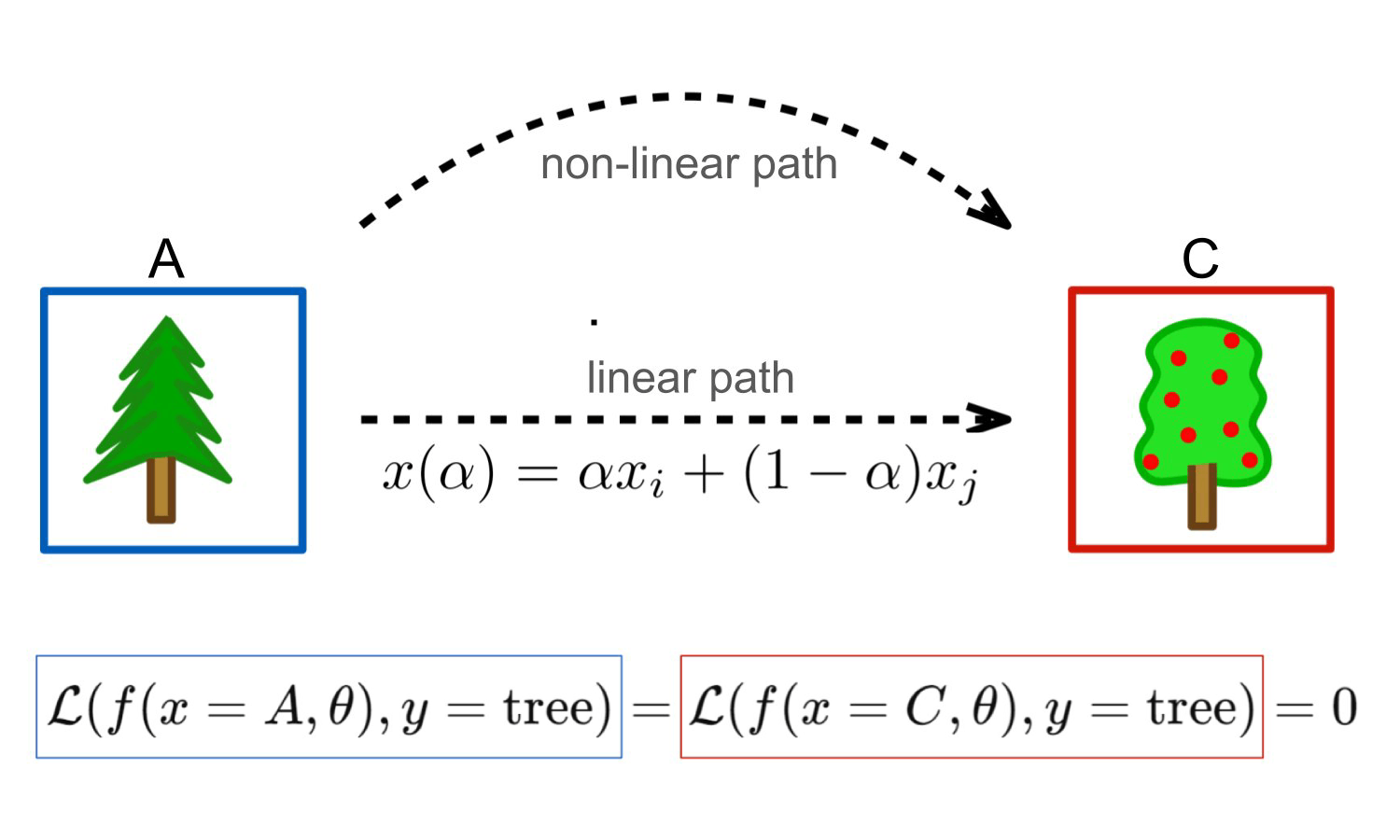

Input space mode connectivity

We generalized the concept of loss landscape mode connectivity to the input space of deep neural networks.

ICLR | arXiv | Talk (D. Krueger)

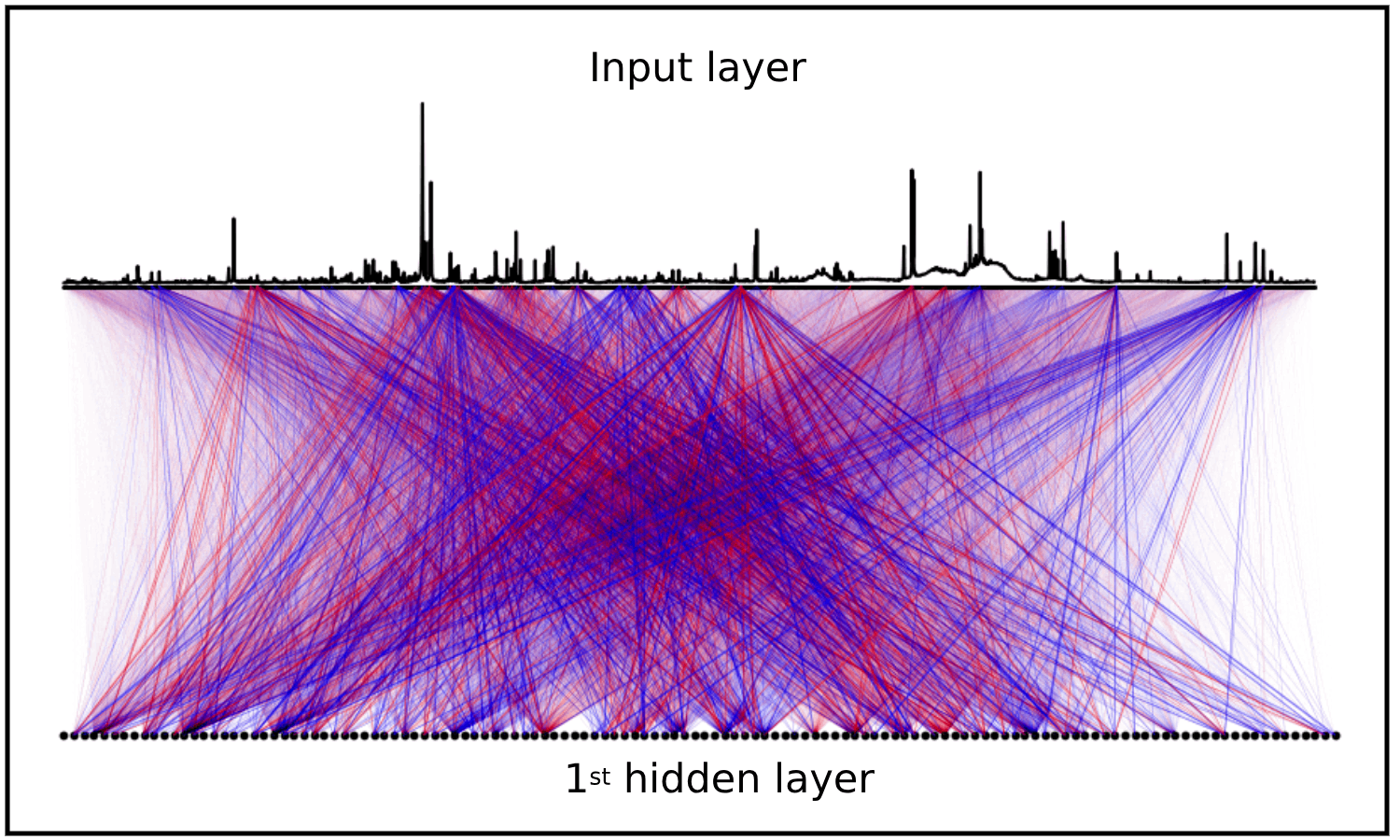

Sparse, interpretable ANNs for spectroscopic data

We study custom loss penalization for MLPs that leads to interpretable and spectroscopically relevant weights in the first layer.

Code

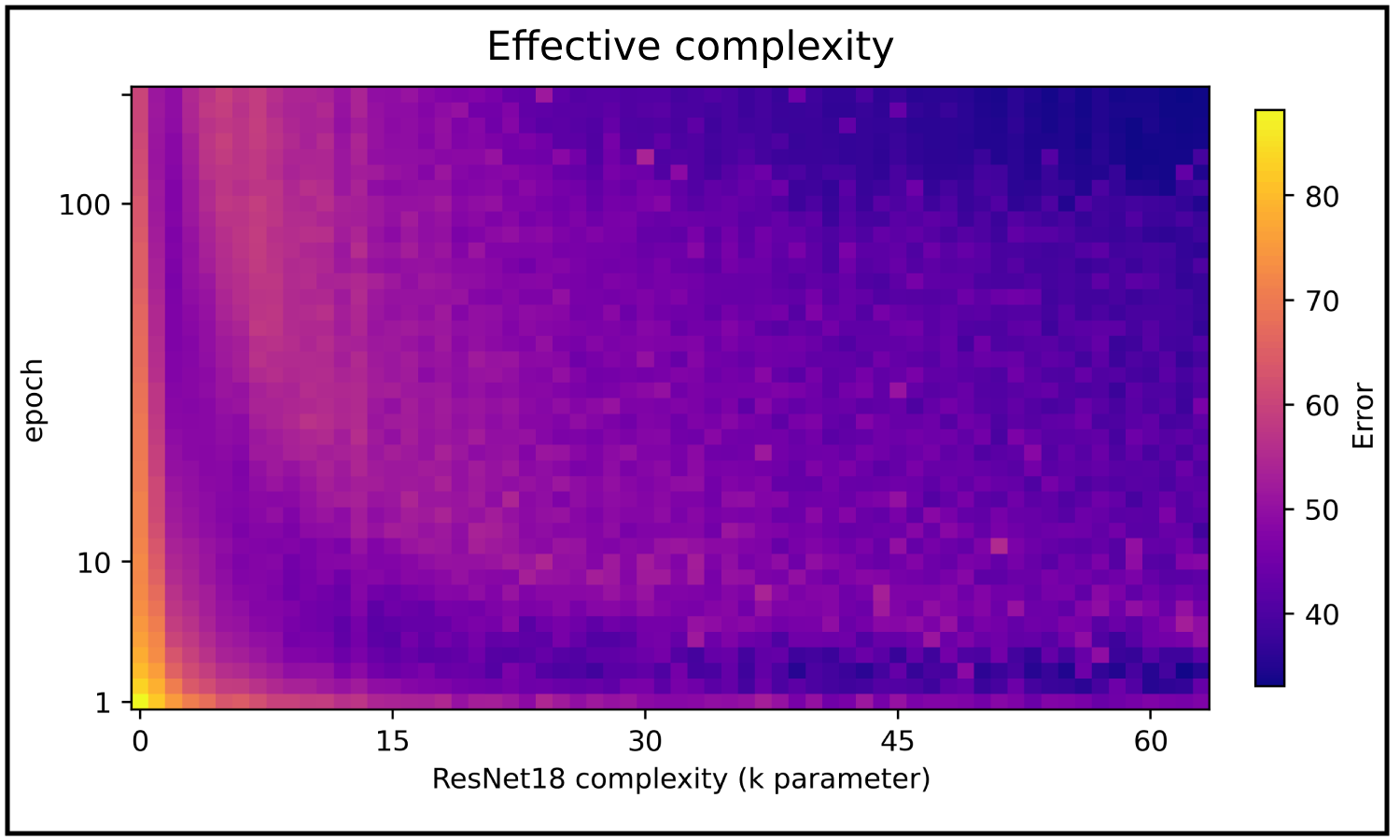

Lottery tickets vs. double descent

In this solo project, I study intrinsic limitations of lottery ticket performance that depend on the initial effective complexity.

Selected past projects